Ever wondered what actually happens inside your digital camera or smartphone when you press that shutter button? It seems like magic: point, click, and a perfect replica of the scene appears on your screen. But it isn’t magic; it’s a fascinating process rooted in technology, and at its very heart lies the humble pixel. Understanding pixels is the first step to demystifying how digital cameras translate the light from the world around us into the images we cherish and share.

The Sensor: Your Camera’s Digital Retina

Forget film rolls. In the digital age, the core component responsible for capturing light is the image sensor. Think of it as the camera’s electronic equivalent of the retina in your eye, or the digital version of a sheet of film. This sensor is a small, rectangular silicon chip, typically either a CCD (Charge-Coupled Device) or, more commonly these days, a CMOS (Complementary Metal-Oxide-Semiconductor) sensor. Its surface is the crucial area where image formation begins. When light travels through the camera’s lens, it’s focused precisely onto this sensor.

The primary job of the image sensor is simple: to detect and record the light that forms the image projected onto it by the lens. It doesn’t inherently understand shapes or objects; it only understands light intensity. But how does it record this light in a way that can be turned into a picture?

Pixels: The Microscopic Building Blocks

This is where pixels come into play. The surface of that image sensor isn’t just one smooth piece; it’s covered in a grid of millions of microscopic, light-sensitive sites. Each one of these individual sites is called a photosite, and each photosite corresponds to one pixel (short for “picture element”) in the final digital image. Imagine a huge mosaic made of millions of tiny, single-colored tiles. Each tile represents a pixel, and collectively they form the complete picture.

When the camera’s shutter opens, photons of light stream in through the lens and strike the sensor’s surface. Each photosite acts like a tiny bucket or well, collecting these photons during the exposure time. The brighter the light hitting a specific spot on the sensor, the more photons land in that photosite’s ‘bucket’. The number of photons collected is proportional to the intensity of light at that point and to the length of the exposure.

A photosite is the physical light-gathering cavity on the image sensor chip itself. A pixel is the smallest individual element of the final digital image, representing color and brightness information derived mainly from its corresponding photosite, although, as we’ll see, its full color is interpolated from several neighboring photosites. While closely related, they represent different stages: the physical capture (photosite) and the resulting digital data point (pixel).

So, at the end of the exposure, each photosite holds an electrical charge proportional to the amount of light it received. A photosite that received bright light holds a strong charge, while one that received dim light holds a weak charge. This grid of varying charges holds the raw information about the pattern of light and shadow that fell on the sensor – essentially, a grayscale map of the scene’s brightness.
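The bucket analogy and the resulting charge map can be sketched in a few lines of Python. This is a toy model, not how any particular sensor is read out. The scene is a hypothetical grid of average photon arrival rates, random photon noise is ignored, and the quantum efficiency `qe` (the fraction of arriving photons that become electrons) is an assumed value.

```python
# Toy model of photosites collecting light during an exposure.
# Assumptions (hypothetical, for illustration only):
#   - `scene` gives the average photon arrival rate (photons/second) at each photosite
#   - photon noise is ignored, so counts are expected values
#   - `qe` (quantum efficiency) is the fraction of photons converted to electrons

def collect_photons(scene, exposure_s):
    """Photons landing in each photosite's 'bucket': arrival rate times exposure time."""
    return [[rate * exposure_s for rate in row] for row in scene]

def to_charge(photons, qe=0.5):
    """Charge (in electrons) is proportional to the photons collected."""
    return [[n * qe for n in row] for row in photons]

def to_grayscale(charges):
    """Normalize charges to 0.0-1.0: a grayscale map of the scene's brightness."""
    peak = max(max(row) for row in charges)
    if peak == 0:
        return [[0.0 for _ in row] for row in charges]
    return [[c / peak for c in row] for row in charges]

scene = [
    [80, 320],    # dim shadow, darker midtone
    [640, 1280],  # brighter area, brightest highlight
]
photons = collect_photons(scene, exposure_s=0.125)
charges = to_charge(photons)
gray = to_grayscale(charges)
print(photons)  # [[10.0, 40.0], [80.0, 160.0]]
print(gray)     # [[0.0625, 0.25], [0.5, 1.0]]
```

Doubling either the light at a photosite or the exposure time doubles its count. The normalized result is the brightness-only map described above, with no color information yet.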

Capturing a World of Color: Filters Step In

But wait, the world isn’t black and white! How do cameras capture color if the photosites only measure light intensity? This is achieved using a clever trick: a Color Filter Array (CFA). Most digital cameras use a specific pattern called the Bayer filter array, which is placed directly over the grid of photosites.

The Bayer filter assigns a color filter – Red, Green, or Blue – to each individual photosite. The most common arrangement is a pattern of RGGB (Red, Green, Green, Blue) across every 2×2 block of photosites. This means:

- 25% of the photosites are filtered to primarily capture Red light.

- 50% of the photosites are filtered to primarily capture Green light.

- 25% of the photosites are filtered to primarily capture Blue light.
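The RGGB layout and its 25/50/25 split can be checked with a short Python sketch. It assumes the 2×2 tile starts with Red at the top-left photosite. Some cameras use a shifted variant of the same pattern (such as BGGR or GRBG), which changes where the tile starts but not the proportions.

```python
# Sketch of an RGGB Bayer color filter array (CFA).
# Assumption: the 2x2 tile starts with Red at (row 0, col 0).
from collections import Counter

RGGB_TILE = [["R", "G"],
             ["G", "B"]]

def bayer_color(row, col):
    """Filter color over the photosite at (row, col); the 2x2 tile repeats across the sensor."""
    return RGGB_TILE[row % 2][col % 2]

def bayer_mask(height, width):
    """Filter color for every photosite on a height x width sensor."""
    return [[bayer_color(r, c) for c in range(width)] for r in range(height)]

mask = bayer_mask(4, 4)
for row in mask:
    print(" ".join(row))
# R G R G
# G B G B
# R G R G
# G B G B

counts = Counter(color for row in mask for color in row)
total = sum(counts.values())
shares = {color: counts[color] / total for color in "RGB"}
print(shares)  # {'R': 0.25, 'G': 0.5, 'B': 0.25}
```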

Why twice as many green filters? The human eye is most sensitive to green light, and green contributes the most to our perception of brightness (luminance) and overall image sharpness. Sampling green twice as densely as red or blue therefore captures finer perceived detail where our eyes notice it most.
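To put a number on green’s share of perceived brightness, the sketch below uses the relative-luminance weights from the ITU-R BT.709 standard, one widely used weighting for linear RGB. These weights come from an outside standard rather than from the Bayer design itself. Treat the example as an illustration of the eye’s bias toward green, not as the documented rationale of any camera maker.

```python
# Relative luminance of linear RGB using the ITU-R BT.709 coefficients.
# Green carries by far the largest weight.

BT709_WEIGHTS = {"R": 0.2126, "G": 0.7152, "B": 0.0722}

def relative_luminance(r, g, b):
    """Weighted sum of linear R, G, B values (each 0.0-1.0)."""
    return (BT709_WEIGHTS["R"] * r
            + BT709_WEIGHTS["G"] * g
            + BT709_WEIGHTS["B"] * b)

# The same 0.1 increase in a single channel shifts luminance by very different amounts:
for name, rgb in [("R", (0.1, 0, 0)), ("G", (0, 0.1, 0)), ("B", (0, 0, 0.1))]:
    print(name, round(relative_luminance(*rgb), 5))
# R 0.02126
# G 0.07152
# B 0.00722
```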

So, when light hits the sensor, a photosite with a red filter only lets red light pass through (mostly), measuring the intensity of red light at that specific point. Similarly, blue-filtered photosites measure blue intensity, and green-filtered photosites measure green intensity. Now, instead of just a map of overall brightness, the sensor holds separate, incomplete maps of red, green, and blue light intensities across its grid.
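The sampling step can be sketched as follows. The example assumes the same RGGB layout with Red at the top-left, and the input is a hypothetical uniform orange scene given as (R, G, B) intensities. Each photosite keeps only the one channel its filter passes, so every color map ends up with gaps.

```python
# Sketch: how a Bayer sensor samples a full-color scene.
# Assumptions: RGGB layout with Red at (0, 0); `scene` is a hypothetical grid of
# (R, G, B) light intensities. Each photosite keeps only the channel its filter passes.

RGGB_TILE = [["R", "G"], ["G", "B"]]
CHANNEL_INDEX = {"R": 0, "G": 1, "B": 2}

def bayer_color(row, col):
    return RGGB_TILE[row % 2][col % 2]

def mosaic(scene):
    """One value per photosite: the scene's intensity in that photosite's filter color."""
    return [[pixel[CHANNEL_INDEX[bayer_color(r, c)]] for c, pixel in enumerate(row)]
            for r, row in enumerate(scene)]

def channel_map(raw, channel):
    """Incomplete map of one color: measured values where that filter sits, None elsewhere."""
    return [[value if bayer_color(r, c) == channel else None
             for c, value in enumerate(row)]
            for r, row in enumerate(raw)]

scene = [[(200, 120, 40)] * 4 for _ in range(2)]  # a uniform orange patch
raw = mosaic(scene)
print(raw)                    # [[200, 120, 200, 120], [120, 40, 120, 40]]
print(channel_map(raw, "R"))  # [[200, None, 200, None], [None, None, None, None]]
```

Even though the scene is one flat color, the raw values alternate from photosite to photosite because each one records a different channel.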

From Analog Signals to Digital Numbers

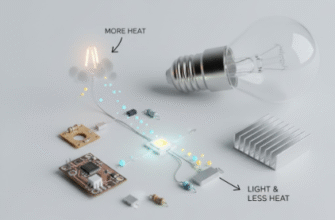

The electrical charge collected by each photosite is read out as a voltage. This is an analog signal that can take any value within a continuous range. Computers, however, work with discrete digital data (ones and zeros). Therefore, the next crucial step is converting these analog signals into digital values. This is handled by an Analog-to-Digital Converter (ADC).

The ADC measures the voltage level from each photosite and assigns it a numerical value. The precision of this conversion is determined by the camera’s bit depth. For example, an 8-bit ADC can assign one of 256 different values (2^8) to each photosite’s reading. A 12-bit ADC offers 4,096 levels (2^12), and a 14-bit ADC provides 16,384 levels (2^14). Higher bit depth means the camera can distinguish between more subtle variations in tone and color, leading to smoother gradients and potentially more detail in highlights and shadows.
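The effect of bit depth can be sketched with a simple quantizer. The sketch assumes the photosite voltage is already normalized to the range 0.0 to 1.0 of the ADC’s input and that the converter rounds to the nearest level; real ADCs and their analog front ends are more involved. The helper names `levels` and `quantize` are hypothetical.

```python
# Sketch of analog-to-digital conversion at different bit depths.
# Assumptions: voltage is normalized to 0.0-1.0 of the ADC's input range,
# and the ADC rounds to the nearest of 2**bits levels.

def levels(bits):
    """Number of distinct values an ADC with this bit depth can output."""
    return 2 ** bits

def quantize(voltage, bits):
    """Map a normalized voltage (0.0-1.0) to an integer code from 0 to 2**bits - 1."""
    voltage = min(max(voltage, 0.0), 1.0)  # clip to the ADC's input range
    return round(voltage * (levels(bits) - 1))

for bits in (8, 12, 14):
    print(bits, levels(bits))
# 8 256
# 12 4096
# 14 16384

# Two slightly different tones:
a, b = 0.2000, 0.2002
print(quantize(a, 8), quantize(b, 8))    # 51 51      (same code: indistinguishable)
print(quantize(a, 14), quantize(b, 14))  # 3277 3280  (different codes)
```

At 8 bits both tones land on the same code, while at 14 bits the small difference survives. This is the “more subtle variations” advantage in miniature.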

It’s important to note that at this stage, the data is still ‘raw’. Each pixel location only has definitive brightness information for *one* color (the color of its filter). The full color information for every pixel has yet to be constructed.

This collection of digital numbers, representing the brightness value for Red, Green, or Blue at each specific pixel location according to the Bayer pattern, is essentially the raw image data.

Image Processing: Building the Final Picture

This raw data, straight from the ADC, isn’t something you’d typically look at. It’s a mosaic of single-color values. To create the full-color image we expect, the camera’s internal image processor (or software on your computer if you shoot in RAW format) performs a series of complex calculations.

The most critical step here is called demosaicing or debayering. This process interpolates, essentially making highly educated guesses, to fill in the two missing color values for each pixel. It looks at the values of neighboring pixels to estimate the Red, Green, and Blue values for *every single pixel* location. For a pixel under a Red filter, for example, it estimates the Green and Blue values from the actual Green and Blue values captured by nearby pixels. The result is a full-color representation in which every pixel has its own Red, Green, and Blue brightness value.
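Demosaicing algorithms vary widely, and camera makers rarely publish theirs. The sketch below uses one of the simplest textbook approaches, bilinear interpolation. For an RGGB mosaic, this amounts to averaging the same-color photosites within one step of each pixel. The sketch assumes Red at the top-left and a sensor at least 2×2 photosites in size. Real processors use more sophisticated, edge-aware methods.

```python
# Minimal demosaicing sketch: bilinear interpolation for an RGGB Bayer mosaic.
# Assumptions: Red at (0, 0); `raw` is a list of rows with one value per photosite;
# the sensor is at least 2x2 so every 3x3 neighborhood contains all three colors.

RGGB_TILE = [["R", "G"], ["G", "B"]]

def bayer_color(row, col):
    return RGGB_TILE[row % 2][col % 2]

def demosaic(raw):
    """Estimate (R, G, B) at every photosite.

    The photosite's own filter color is kept as measured. Each missing color is the
    average of the neighbors (one step away, including diagonals) that measured it.
    """
    height, width = len(raw), len(raw[0])
    image = []
    for r in range(height):
        out_row = []
        for c in range(width):
            sums = {"R": 0.0, "G": 0.0, "B": 0.0}
            counts = {"R": 0, "G": 0, "B": 0}
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < height and 0 <= cc < width:
                        color = bayer_color(rr, cc)
                        sums[color] += raw[rr][cc]
                        counts[color] += 1
            own = bayer_color(r, c)
            out_row.append(tuple(
                float(raw[r][c]) if color == own else sums[color] / counts[color]
                for color in "RGB"
            ))
        image.append(out_row)
    return image

# Raw data from a uniform orange patch (R=200, G=120, B=40) under an RGGB filter:
raw = [[200, 120, 200, 120],
       [120, 40, 120, 40],
       [200, 120, 200, 120],
       [120, 40, 120, 40]]
print(demosaic(raw)[0][0])  # (200.0, 120.0, 40.0)
print(demosaic(raw)[3][3])  # (200.0, 120.0, 40.0)
```

At a Red photosite, the four Green neighbors sit directly above, below, left, and right, and the four Blue neighbors sit on the diagonals. That is why a same-color average over the 3×3 window reproduces standard bilinear interpolation.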

Beyond Demosaicing

But the processing doesn’t stop there. The camera’s processor performs several other adjustments to enhance the image:

- White Balance: Adjusts the overall color cast to make white objects appear white under different lighting conditions (e.g., sunlight vs. fluorescent light).

- Noise Reduction: Reduces random speckles or grain (noise) that can appear, especially in low light or at high sensitivity settings (ISO).

- Sharpening: Enhances edge contrast to make the image appear crisper.

- Color Correction & Saturation: Adjusts the vibrancy and accuracy of colors based on preset profiles or user settings.
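White balance can be performed in many ways. The sketch below uses one simple, well-known heuristic, the “gray world” method, which is not necessarily what any camera uses. It assumes the scene averages out to neutral gray and scales the red and blue channels so their averages match green’s. The input is a hypothetical demosaiced image of (R, G, B) values, and each channel’s mean must be nonzero.

```python
# Gray-world white balance: a simple heuristic, not any specific camera's algorithm.
# Assumption: the scene's average color should be neutral gray, so red and blue are
# scaled until their means match the mean of the green channel (means must be nonzero).

def gray_world_balance(image):
    """Return a white-balanced copy of `image`, a list of rows of (R, G, B) floats."""
    pixels = [p for row in image for p in row]
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    gain_r, gain_b = mean_g / mean_r, mean_g / mean_b  # green is the reference
    return [[(r * gain_r, g, b * gain_b) for (r, g, b) in row] for row in image]

# A gray card photographed under warm (reddish) light comes out tinted:
tinted = [[(160.0, 100.0, 50.0), (80.0, 50.0, 25.0)]]
print(gray_world_balance(tinted))
# [[(100.0, 100.0, 100.0), (50.0, 50.0, 50.0)]]
```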

- Compression: If saving as a JPEG (the most common format), the processor compresses the image data to create a smaller file, discarding some information in the process (lossy compression). Shooting in RAW format skips this JPEG step and most of the in-camera processing above, keeping the sensor data itself; some cameras still compress RAW files, often losslessly.

After these steps, the final data is organized and saved as an image file (like a JPEG or TIFF) onto your memory card. A RAW file, by contrast, stores the sensor data largely unprocessed.

Megapixels Explained

You constantly hear about megapixels (MP) – millions of pixels. A 24MP camera has roughly 24 million photosites on its sensor, resulting in an image composed of 24 million pixels. More pixels generally mean the camera can capture finer detail. Think back to the mosaic analogy: more tiles allow for a more intricate and detailed picture.
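The megapixel arithmetic is simple. The sketch below assumes a hypothetical 6000 × 4000 photosite sensor and one 14-bit sample per photosite, matching the 14-bit ADC example earlier. It computes the pixel count, the size of the raw data before any compression and, for comparison, a demosaiced image storing three 8-bit values per pixel.

```python
# Megapixel and data-size arithmetic.
# Assumption: a hypothetical 6000 x 4000 sensor (3:2 aspect ratio).

width, height = 6000, 4000
pixels = width * height
megapixels = pixels / 1_000_000
print(pixels, megapixels)  # 24000000 24.0

# Raw data before compression: one 14-bit sample per photosite.
bits_per_photosite = 14
raw_megabytes = pixels * bits_per_photosite / 8 / 1_000_000
print(raw_megabytes)  # 42.0

# After demosaicing: three 8-bit values (R, G, B) per pixel, before JPEG compression.
rgb_megabytes = pixels * 3 / 1_000_000
print(rgb_megabytes)  # 72.0
```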

Higher megapixel counts allow for larger prints without losing quality and give you more flexibility for cropping images later without them becoming blurry or pixelated. However, cramming more pixels onto a sensor of the same size involves a trade-off: each photosite must be smaller, so it collects less light and tends to produce more noise, especially in low light. Sensor size itself plays a huge role here. A 24MP full-frame sensor (larger physical size) will typically outperform a 24MP smartphone sensor (much smaller physical size) in light gathering and noise performance, because its individual photosites are much larger.
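To see why sensor size matters, the sketch below divides each sensor’s area by the same 24 million photosites. It makes three assumptions: full frame is 36 × 24 mm, the smartphone sensor is a hypothetical 6.4 × 4.8 mm chip (actual phone sensors vary), and gaps between photosites are ignored. Under the same illumination per unit area, a photosite’s light collection scales roughly with its area.

```python
# Photosite size for the same 24 MP count on two sensor sizes.
# Assumptions: full frame is 36 x 24 mm; the smartphone sensor is a hypothetical
# 6.4 x 4.8 mm chip; gaps between photosites are ignored.
import math

def photosite_area_um2(sensor_w_mm, sensor_h_mm, pixel_count):
    """Average area per photosite, in square micrometres (um^2)."""
    sensor_area_um2 = (sensor_w_mm * 1000) * (sensor_h_mm * 1000)
    return sensor_area_um2 / pixel_count

PIXELS = 24_000_000
full_frame = photosite_area_um2(36, 24, PIXELS)
phone = photosite_area_um2(6.4, 4.8, PIXELS)

# Area (um^2) and approximate pitch (um) of one photosite:
print(round(full_frame, 2), round(math.sqrt(full_frame), 2))  # 36.0 6.0
print(round(phone, 2), round(math.sqrt(phone), 2))            # 1.28 1.13
print(round(full_frame / phone, 1))                           # 28.1
```

With these numbers, each full-frame photosite has about 28 times the area of the phone’s. That is the physical basis for the light-gathering and noise advantage described above.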

Megapixels indicate the resolution or the total number of pixels in an image, influencing potential detail and print size. However, image quality is a complex balance involving not just pixel count, but also sensor size, lens quality, the effectiveness of the image processor, and the bit depth of the analog-to-digital conversion. Don’t judge a camera solely by its megapixel count.

So, the next time you snap a photo, remember the incredible journey that light takes. Photons land in millions of tiny, filtered buckets on the sensor, their charges are converted into digital numbers, and those numbers undergo complex processing before finally becoming the digital image you see, with the humble pixel at every stage. It’s a remarkable blend of physics and computation happening in the blink of an eye.